Hello hello everyone! Welcome to another Month in Review! This one has been a blur, and yet incredibly slow at the same time. Though, that’s been happening a lot for me lately. Could that just be normal?

-stares off into oblivion-

Well, besides that, let’s get to this month!

I broke more ice in community development work!

Before I get after Genome Studios stuff, I ended up breaking the ice in updating O3DE public documentation in relation to the UI component I ended up publishing last month. From here on out things like this become easier and easier! I think it’s pretty damn cool. I already have my eyes on another little addition to make down the line.

Here it is in all its glory. Boom: https://development--o3deorg.netlify.app/docs/user-guide/interactivity/user-interface/components/interactive/components-interactivitytoggle/

Alright back to the work part.

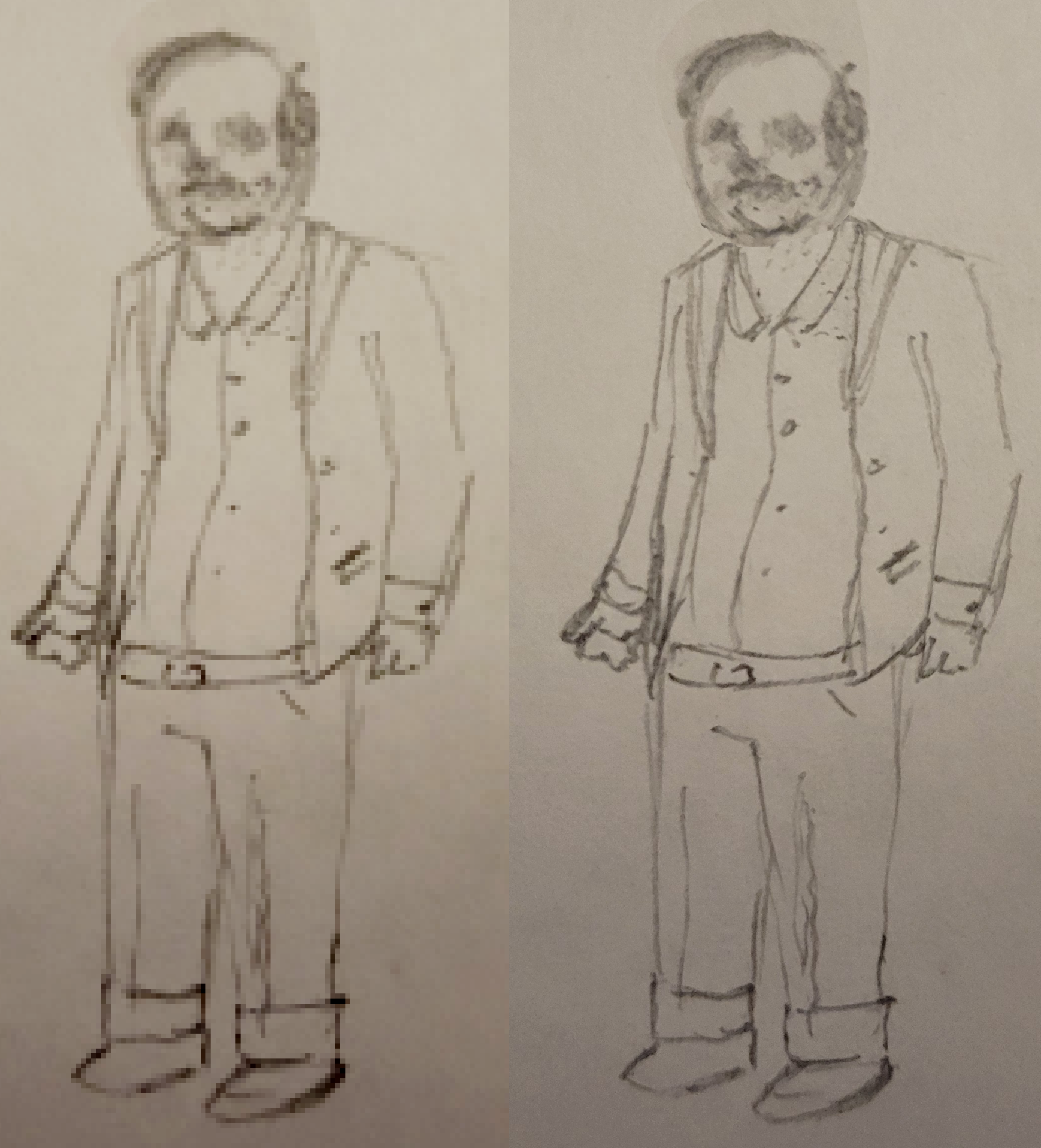

I continued the thread of concept arting from the end of last month and tackled the rest of the general town environment art. I started slowing down considerably in my motivation to keep chipping at art. I did manage, however, to tackle the first draft of our main character, Jorje.

This has set the specs for how much detail and form we will be using for the characters throughout the project. I think it’s a nice line between detailed enough to convey a lot of character in just how the character looks, while still maintaining the ambiguity that leaves the player filling in the gaps. Especially significant in the dreamlike style of presentation I broke into with Del Lago Layover. If you didn’t know, a signature aspect of dreaming is that it’s often incredibly hard to have facial recognition/detail. You usually just “know” who the person is, or what their context is in the plot of your dream.

Then I hit arting bedrock.

I started procrastinating super hard on the art/concept stuff and was tackling other things while I tried to warm back up to it. I didn’t. I remained cold as ice. So, after the first week of procrastinating and feeling guilty about it, I just let go and let myself do other things, purpose driven instead of guilt driven. Pivoting and being agile in development can touch on MANY layers of one’s experience and the project at hand. One way or another EVERYTHING necessary for the game needs to be done, and if it just isn’t in the stars for x, y, z, particular element to be made manifest at this exact point in time, might as well tackle the things that can be.

Slowly we’re creating our game project’s world.

This month, I ended up working out some tasks with my one colleague to start importing trees, rocks, and other natural assets, along with interior human assets for the game to start being filled with detail. He got into the flow of it, and even broke out some scripting to automate some of the process! Killin it.

We’ve still got some more laborious importation work to do, but it’s exciting to see that it can even happen at all with the assets I’ve got at hand.

Alongside that we’re working out how to create post processing render layers in the O3DE system in order to create some critical layers for the presentation of the project. Very exciting to be treading into the render systems and make out how any of it works. It’s a very powerful and comprehensive system, but that also means it’s no walk in the park to just pick up.

Speaking of world building…

Early in the month I ended up volunteering to show off the GS_Play suite of tools, as they stood right here and now, for the monthly O3DE Connect presentation. What that featureset is is a rapidly moving target, but it’s always valuable to put it out there when there’s opportunity to show it off.

This ended up in me scrambling for the first half of the month to get features more together and stable. Getting it to SOME form of presentational stability.

It was a blessing in disguise, because it meant I was fussing around with frustrating, boring things that were nagging at me, but that I was putting off because I wanted to tackle bigger, broader things. (Exactly the kind of thing a producer is employed to make sure doesn’t happen.)

Producers:

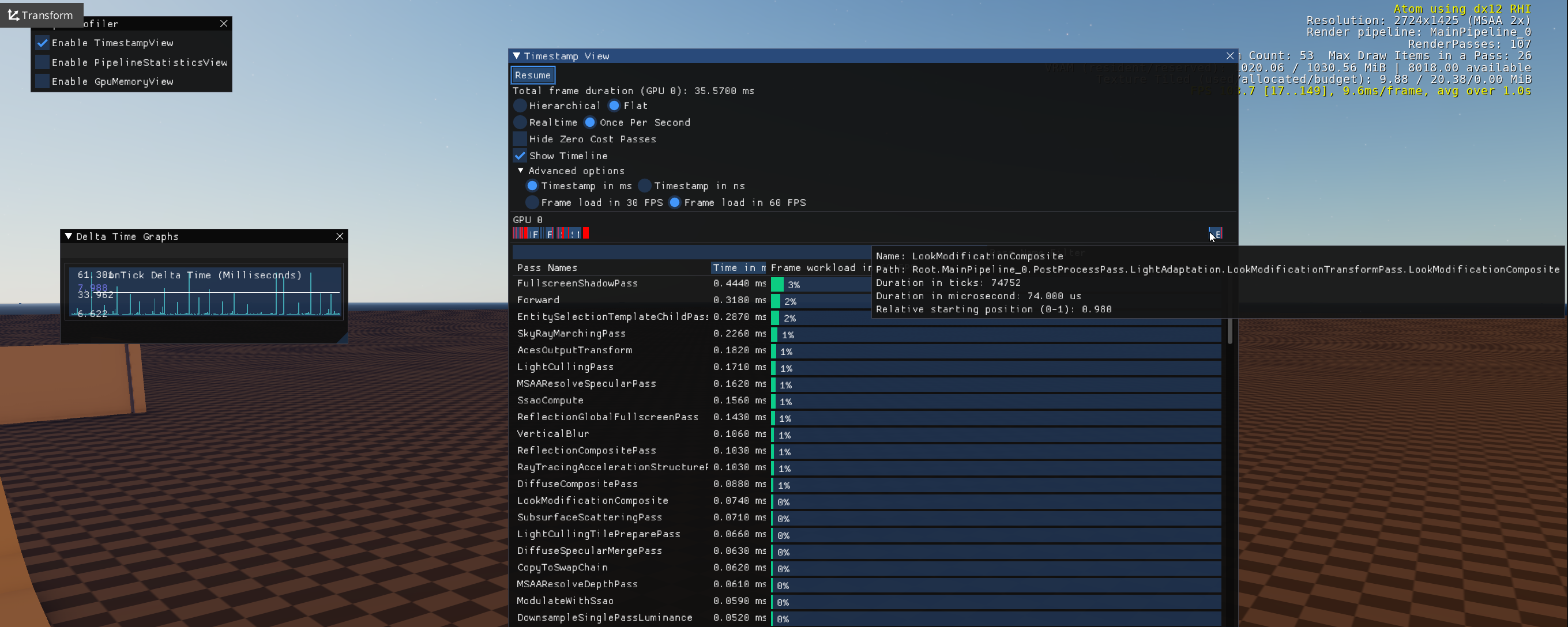

I ended up doing my first diagnostics of our work and the O3DE systems using the imgui tool they have built into the engine. I was quite intimidated by what the system is and how it works, but it was actually very powerfully and competently implemented for the kinds of diagnostics I was looking at.

I got to see the various processing demands over frames, monitor the delta time as the game was running in the editor. Lots of stuff. I am not someone that loves diagnostics and optimization as a sole responsibility, but I definitely find looking over my work and seeing how performance is happening to be very cool.

Beyond that I did some fixes to the camera system when it comes to initial startup, where it was missing certain values necessary to reposition to its targeted positioning. Due to that little fussy thing the camera would always start locked at 0,0,0 looking right up the guardian’s groin until I moved and filled the values/cached values with data. The fix brings the camera delicately to its starting point. What a relief. It’s never enjoyable seeing a glaring issue like that EVERY time you start the playmode.

Yeah… uh… m…

I implemented some features I had been working towards, which were DEFINITELY necessary for a showcase at this stage of the project.

We got camera fields to change the primary phantom camera as you navigate the level.

I chained together some world triggers and world stuff to show off how simple it is in a design front to lace complex layers of triggers together to arrive at a desirable interactive world.

And lastly, I put together a really raw scene and 10 minute presentation to show off what was there. It was a conflicting feeling, as if literally this month had happened before this particular presentation, I would have had a LOT more to show off in a much more “grounded in a game” kind of format. But then again, I wouldn’t have been stirred by the pressure of the presentation to tackle the particular parts of the framework that I did.

/shrug

But here it is! My O3DE Connect presentation!

Rolling back to the cool stuff we tackled this week that would have been presentation worthy…

I started chipping away at our “Town” level in the We Don’t game project. At the time it was just me procrastinating and doing something “fun”, instead of flat out not working at all, but it ended up being very valuable to have done!

I tackled some much needed development features:

Debug Startup.

One aspect of the debug startup features was loading the player at target points in the level so that when you’re testing, you can place yourself literally in front of the target thing you are building and testing. That SIGNIFICANTLY improved the time spent in building and testing things in the level. The first of many debug features we will be making, but a really subtle yet impactful one to have.

Handling this startup feature also drove me towards formalizing our level changing featureset, as the repositioning of the character to a point originates from that system. This forced me into a LOT of stabilizing of systems. C++ is a very particular beast, and even a single missed reference when destroying an entire level and all its systems will make you crash. Something you need to staunch as soon as possible before you create a rat’s nest of code that can’t survive the scrutiny of basic game systems.

For the work on the level itself, I was eking out the unspoken subtle aspects of building a game and its world: Scale, speed, and perspective.

Cinematography in any medium is a very interesting thing, as like with programming, it’s rarely a matter of “if” something is possible, but “how” you’ll do it to solve the needs this time, here and now.

When you change the scale of things relative to the camera the world looks bigger and more immersive (but sometimes too big), or too small and tiny. As you adjust field of view you can get the same framing as moving the camera closer or farther away. Then there’s having the camera lower, or higher, tilted downward, or more forward… All these things can affect the same few layers of perception, but all in subtly different ways. Finding that sweet spot is a fussy complicated cycle of changing one aspect, feeling it out, re-changing it or changing a different aspect, feeling it out, etc. Repeated ad nauseam.
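To put a number on the field of view versus distance trade-off, here is a minimal sketch assuming an ideal pinhole camera and a vertical field of view in degrees. The function names are made up for illustration and are not part of O3DE or GS_Play.

```python
import math


def visible_height(distance, fov_degrees):
    """Height of the view at `distance` in front of an ideal pinhole camera."""
    return 2.0 * distance * math.tan(math.radians(fov_degrees) / 2.0)


def distance_for_same_framing(distance, old_fov, new_fov):
    """Distance at which `new_fov` frames the same height `old_fov` framed at `distance`."""
    old_half = math.tan(math.radians(old_fov) / 2.0)
    new_half = math.tan(math.radians(new_fov) / 2.0)
    return distance * old_half / new_half


# Narrowing the lens from 60 to 30 degrees means backing the camera up
# to keep the character the same size in frame.
new_distance = distance_for_same_framing(10.0, 60.0, 30.0)
```

The subject ends up the same size on screen, but the perspective does not match: the narrower, farther camera flattens depth, which is one reason the two adjustments feel subtly different.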

But despite the fuss, arriving at a place where the details finally synergize and complement each other, where you suddenly feel like the world and the player character are actually grounded together, is really, really satisfying.

As part of this fuss, I added movement fields which, like the camera fields, change the movement numbers for characters when they enter the field. This is commonly used to change how the characters behave indoors and outdoors. On top of the speed changing, I ended up implementing speed-change-blending. The need to ease into a new speed when the value changes. In this case it’s from movement fields, but it can include sprinting and other things like that. Having the character suddenly change to a higher speed on a dime does not look or feel too good. Smooth it out. Fuss fuss fuss.
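For anyone curious what speed-change-blending can look like, here is a minimal sketch of one common approach: moving the current speed toward the target at a capped rate each frame. This is not the GS_Play implementation; the function and parameter names are hypothetical.

```python
def blend_speed(current, target, max_change_per_second, dt):
    """Step `current` toward `target`, changing by at most max_change_per_second * dt."""
    step = max_change_per_second * dt
    delta = target - current
    if abs(delta) <= step:
        # Close enough to land exactly on the target this frame.
        return target
    return current + step if delta > 0 else current - step


# Walking out of an "indoors" movement field (speed 2) into "outdoors" (speed 5).
speed = 2.0
for _ in range(10):
    speed = blend_speed(speed, 5.0, max_change_per_second=4.0, dt=0.1)
```

A capped linear step like this is predictable to tune. An easing curve or exponential smoothing would give a softer start and finish at the cost of one more number to fuss with.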

While I love tackling those sorts of features, as it enriches how one builds environments, I’ve built and rebuilt similar systems forever. Literally, the reason for making this gameplay framework is so I (hopefully) NEVER have to remake these sorts of things. Not even if some feckless CEO decides to retroactively alter terms of service agreements and harass prominent game studios with Generative AI led licensing confrontations that are half hallucinated…

My first new feature? Track Camera!

Awww yeah. I may not have made it clear, but I LOOOVE camera work and how much impact it has in evoking emotion from the media it’s serving. Much like creating the best selfie, half the work is posing and aesthetic, and the other three quarters is camera angle. If you’re at all working in a medium that involves looking at things from a point of view I HIGHLY HIGHLY recommend studying some cinematography.

But back to the track camera…

This was one of the more challenging things I’ve worked on in a while. I was touching on many unknowns with this one and it definitely tested my mettle. I had to utilize splines: a mathematical line that you measure a point on and find where something would be based on that line. Target tracking. And features around how the camera will work over the spline’s distance.

It was a mucky messy ordeal. I tried a few ways to do certain things, but, much like the world building itself, each feature did nearly the same thing, had their own shortcomings, and ultimately didn’t result in any better an outcome than the last. What it boiled down to is finding how I would use the system in a way that shines.

After some time I managed to bring it around. The most significant detail being that I made it so the camera actually blends between two settings between the beginning and the end of the camera line. This allowed me to shift the camera’s behaviour and output over the length of the track. That was the secret sauce. Finally things started to look like something purposeful and functional rather than some sloppy, messy prototype.
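The idea of blending two camera settings over the length of the track can be sketched roughly like this. Big assumptions: the spline is approximated by a 2D polyline, the settings are just a field of view and a height, and every name here is hypothetical rather than taken from GS_Play or O3DE’s spline component.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraSettings:
    fov: float
    height: float


def track_fraction(points, target):
    """Fraction (0..1) along the polyline `points` of the point closest to `target`."""
    lengths = [math.dist(a, b) for a, b in zip(points, points[1:])]
    total = sum(lengths)
    best_dist_sq, best_along, walked = math.inf, 0.0, 0.0
    for a, b, length in zip(points, points[1:], lengths):
        u = 0.0
        if length > 0.0:
            # Project the target onto this segment and clamp to its ends.
            dot = (target[0] - a[0]) * (b[0] - a[0]) + (target[1] - a[1]) * (b[1] - a[1])
            u = max(0.0, min(1.0, dot / (length * length)))
        px, py = a[0] + (b[0] - a[0]) * u, a[1] + (b[1] - a[1]) * u
        dist_sq = (target[0] - px) ** 2 + (target[1] - py) ** 2
        if dist_sq < best_dist_sq:
            best_dist_sq, best_along = dist_sq, walked + length * u
        walked += length
    return best_along / total if total > 0.0 else 0.0


def blend_settings(start, end, t):
    """Linearly blend two camera settings; t=0 gives start, t=1 gives end."""
    return CameraSettings(
        fov=start.fov + (end.fov - start.fov) * t,
        height=start.height + (end.height - start.height) * t,
    )


track = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
t = track_fraction(track, (12.0, 5.0))
settings = blend_settings(CameraSettings(40.0, 2.0), CameraSettings(60.0, 4.0), t)
```

Here the character standing beside the second leg of the track lands three quarters of the way along it, so the camera uses settings three quarters of the way between the start and end values.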

But guess what? It only rang true a bit after I finished messing with it. I began to scale down the size of the world, as it’d been too overblown, and made the otherwise human looking details feel like a giant’s world.

Only after I tightened the area the track camera was looking over, which brought the character into the frame far more, did the track finally pay off in that final experience.

I ended up working out an additional camera, though I haven’t yet implemented it into the game project. A fixed angle camera: a camera where it follows your target, but doesn’t turn further than its maximum settings. This is valuable in scenes like Resident Evil style games where you look down one hall, and follow the character as they round the corner and go down the next hall. All without clipping through the environment and looking into the void when pushed to its maximum angles.
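The core of a fixed angle camera can be sketched as a clamp on how far the camera may turn away from its resting direction. This is a simplified 2D, yaw-only illustration with hypothetical names, not the actual GS_Play camera.

```python
import math


def clamped_yaw_to_target(camera_pos, target_pos, rest_yaw_deg, max_turn_deg):
    """Yaw in degrees that looks toward the target, limited to rest_yaw +/- max_turn."""
    dx = target_pos[0] - camera_pos[0]
    dy = target_pos[1] - camera_pos[1]
    desired = math.degrees(math.atan2(dy, dx))
    # Signed shortest difference from the resting yaw, in [-180, 180).
    offset = (desired - rest_yaw_deg + 180.0) % 360.0 - 180.0
    offset = max(-max_turn_deg, min(max_turn_deg, offset))
    return rest_yaw_deg + offset


# Camera rests looking down the hall (+x) and may turn 30 degrees either way.
yaw = clamped_yaw_to_target((0.0, 0.0), (0.0, 10.0), rest_yaw_deg=0.0, max_turn_deg=30.0)
```

While the character stays inside the allowed arc the camera tracks them exactly. Once they round the corner, the yaw simply holds at the limit instead of swinging into the wall.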

Camera, camera, camera.

Fixed some blending features, stabilized things as best I could. Made camera collision. Every chip into camera features pays back tenfold.

Next in world building? Staging.

Not the end all push towards finalizing the Inn, but blocking out with a more specific purpose of filling the space and making it feel like a place, rather than a world of grey boxes.

This suddenly contextualized the world so much. Things started to feel more present. I mocked up some slightly more detailed placeholders, like tables with legs instead of solid cubes, fussed the scale some more, added colour.

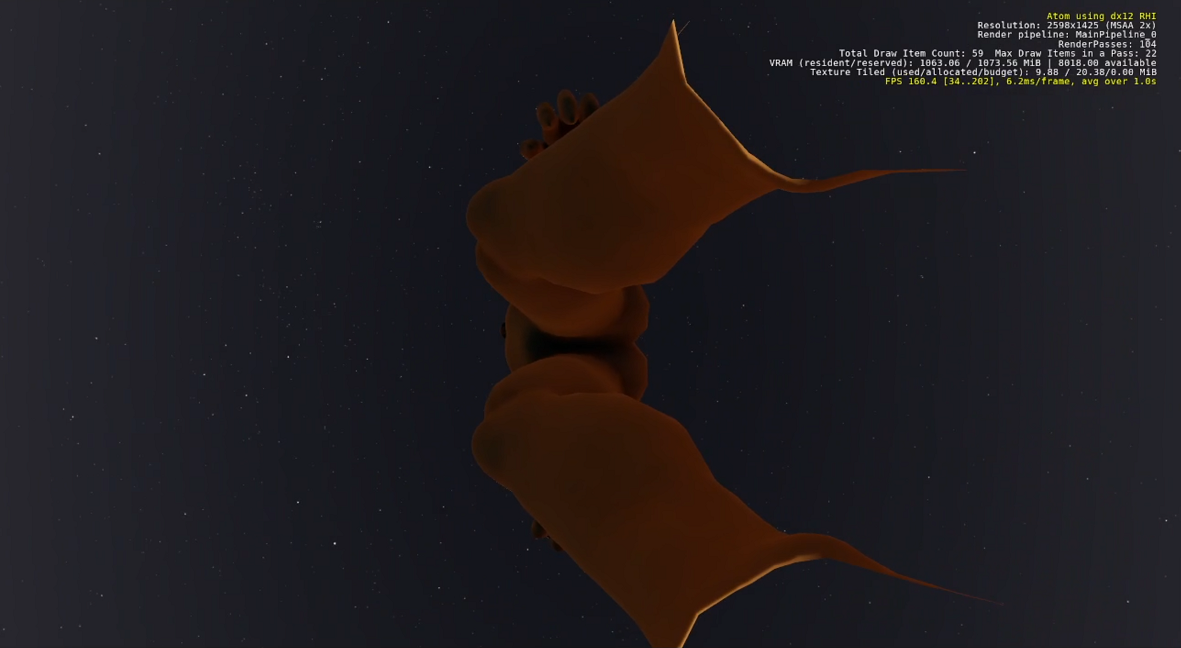

And just to really seal the deal, I added lighting to the building. Wowee.

Taking a quick break to talk about lighting.

Up to this point, I have ONLY used O3DE’s directional light. The light type that’s used for general lighting (often representing the sun).

But, peering into the lighting available when doing actual space by space lighting was beautiful. I come from Unity, and the lighting options are standard, and quite restrictive when it comes to how modern high fidelity/high quality games handle their lighting.

In modern level development and staging, areas are often lit by “spatial” light sources. This isn’t volumetric lighting (a feature of lighting that makes godrays and fog lighting). It’s where you have a shape, like a cube or capsule, and the light emanates from its surface, like a fluorescent light.

If you’ve played FF7 Remake, or most modern competitive games with indoor arenas… or almost any modern game with insides, really, you’ve seen environments lit by these spatial lights. Unlike point lights and cone lights (which come from a point), these more modern light types allow for far more uniform and controllable lighting of a space without massive hotspots and dark corners. O3DE is touted as having a very modern and powerful rendering engine, and finally, I see where that’s manifested relative to my field of work. The lighting is future facing, and more than satisfies what I’d ever anticipate needing from lighting. So so so so cool.

So I put lighting in the Inn.

And yeah, I did 2 seconds of work on it and suddenly the interior of the inn is warm and lit in a way that feels like the indoors. The lighting complemented all the blockout work I did, the cameras look nice and present the scene really well. It was finally something that began proving that a game can manifest from the work we’ve been doing. Very rewarding and exciting.

And this is what I finally got, using all those features, world building, and fussing:

So speaking of free community work.

Because of all this initial level building stuff I returned to a need I kept running into: I had to manually implement flat colour materials for every piece of the level that I was colourizing. One night I finally had a mindblast. If my colleague was able to automate creating materials out of assets and their textures, perhaps I could output coloured materials.

So I did.

I pulled up a colour scheme I really found pleasing, and which covers a broad range of colour needs, slowly gathered the hex colour values of each one into a massive list, and then output a massive pool of O3DE materials. Took a millisecond.
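The general shape of that kind of batch generation looks something like the sketch below. To be loud about it: the JSON layout and the `baseColor` key are placeholders I made up for illustration, NOT the real O3DE material schema, and colour-space handling is ignored. Check the repository for what the actual output looks like.

```python
import json


def hex_to_rgb(hex_colour):
    """Convert '#RRGGBB' into a list of three 0..1 floats."""
    value = hex_colour.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected a 6-digit hex colour, got {hex_colour!r}")
    return [round(int(value[i:i + 2], 16) / 255.0, 4) for i in (0, 2, 4)]


def build_material(name, hex_colour):
    """Hypothetical material description; the keys are illustrative only."""
    return {"name": name, "baseColor": hex_to_rgb(hex_colour)}


def materials_as_json(palette):
    """Map a {name: hex} palette to {filename: JSON text}, one entry per colour."""
    return {
        f"{name}.material": json.dumps(build_material(name, hex_colour), indent=4)
        for name, hex_colour in palette.items()
    }


files = materials_as_json({"WarmOrange": "#FF8000", "Red": "#FF0000"})
```

Writing each entry of `files` to disk is then a short loop, which is why generating hundreds of flat colours takes no time at all once the list of hex values exists.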

So I made a repository and put it out for free!

Here it is: https://github.com/GenomeStudios/O3DE_BasicMaterialColours

When I have some time, I was thinking about expanding it with a pool of primary colours, a set of browns and greys, and emissive materials, to start.

I hope this can boost your prototyping ability. I am sure excited to get back to doing an environment pass and actually using these materials. Of course, I had to come up with the idea after I had finished.

Then, all of a sudden… I got this itch…

An itch to mess around with audio.

I had been circling around all this year on it: If I want to just use an implemented audio system I could fall back on Wwise. However, that meant that I’d have to commit to some pretty steep demands.

I could: Pay $7000 upfront to secure a license to use the audio solution for the We Don’t Go Into The Forest project… a project whose size does not reflect plans for income to overshoot that seven thousand dollar mark, let alone hit that mark.

Or I could: Take on the royalties licence for Wwise and have to pay a fraction out of our sales for the privilege of using it. Which, of course, piles on to the 30% that Steam takes, income tax… other things I’m probably forgetting…

(Actually looks like they have some more accommodating options these days. But still!)

But this is why I chose to take up arms for O3DE in the first place. License free commercial production, democratizing the means of creating videogames to the public…

So I did it. I started up the GS_Audio gem. It was hovering around creation, but I wasn’t ready to commit until now. I don’t know audio software, math, and processing, but I’ll figure out what I need to get audio happening at all. Maybe that can be enough.

I tackled so much of it over a full day of work, only to find out so much of what I had made, with LLM assistance in understanding the tech, was hallucinated. Of course.

I did have plenty of structure and logic and classes there and ready to operate, but the connections and exact moment by moment logic were severed.

I panicked and pivoted. Can’t sit here spinning my wheels for long. I have an entire rest of the game to make.

So I fell back into an easy featureset that needed tending to, but was hardly challenging anything I knew. A time of day, day/night, and weather system. The backbone of the GS_Environment featureset. Everything around contextualizing the world in greater detail.

Aside from struggling with certain contact points with the engine, like manipulating the directional light and the atmosphere system, it was a breeze, just a day of chipping away at it until I could input some nice light changing and get a really nice output.

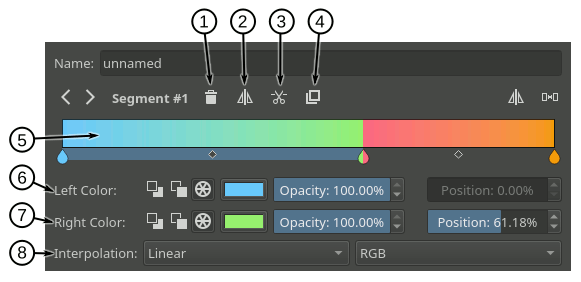

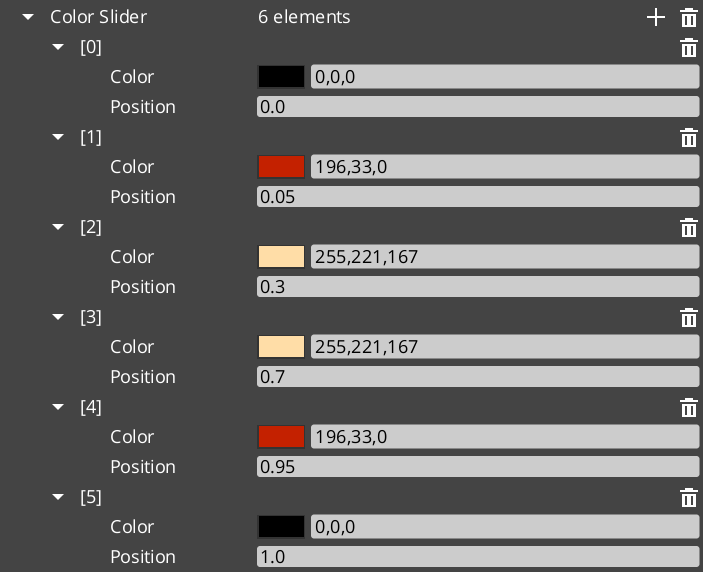

Part of the fuss was actually making a Colour Gradient variable, common to game and graphics software. It’s a bar of colour keys that blend from key to key. You then ask for the colour at a certain position and it gives you whatever that colour would be.

I do not have the GUI experience around making a new element type in the engine so I went for an ugly ugly brute force method. Making a list of colours and where they would be on the bar, and then hoping that the colours will be placed at a good point, and give a good colour blend as output.

It worked! Haha. It’s not pretty but it exists and serves.
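For the curious, the brute force version of a colour gradient boils down to something like this sketch: a list of (position, colour) keys and a lookup that blends between the two keys on either side of the requested position. Names are hypothetical and this is not the GS utility itself.

```python
def sample_gradient(keys, position):
    """Blend linearly between colour keys; `keys` is a list of (position, (r, g, b))."""
    if not keys:
        raise ValueError("a gradient needs at least one key")
    ordered = sorted(keys, key=lambda key: key[0])
    # Outside the keyed range, hold the nearest end colour.
    if position <= ordered[0][0]:
        return tuple(ordered[0][1])
    if position >= ordered[-1][0]:
        return tuple(ordered[-1][1])
    for (p0, c0), (p1, c1) in zip(ordered, ordered[1:]):
        if p0 <= position <= p1:
            t = (position - p0) / (p1 - p0) if p1 > p0 else 0.0
            return tuple(a + (b - a) * t for a, b in zip(c0, c1))
    return tuple(ordered[-1][1])


# Night -> warm sunrise -> bright day, keyed along a 0..1 bar.
sky = [(0.0, (0.0, 0.0, 0.0)), (0.5, (1.0, 0.5, 0.0)), (1.0, (1.0, 1.0, 1.0))]
dawn = sample_gradient(sky, 0.25)
```

Sorting on every lookup is wasteful but keeps the sketch forgiving about key order, which matters when the keys are typed in by hand as a plain list.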

I’d love to improve that down the line and add it to the O3DE source code, but for now it’s a GS utility to get our work working.

But here we go. We can set time of day, have time passage. There’s sun and moon. Light colour blends over time. We have some baseline features like triggering a new day, or when day and night change between each other. It’s bare bones, but pretty well all we need for some cinematic presentation.

I’ve got some weather system work to do on it, once the much anticipated Open Particle System gets merged into the development branch of the source code. But that will have to come later.

With day/night being handled, making shadows where we want and painting the environment with a rich tone that sets the mood… I couldn’t resist getting back into the audio work.

It wasn’t as much of a loss as I thought.

At the end of that last audio day I had done some looking through the source code of the premade components already present for using the miniaudio sound library. I had started the study simply to refactor my work into using those components and building a system on top of them: making countless entities with a playback component on each. It was a very ugly implementation, but in my defeat, I wanted at least something.

Here’s where sleeping on things is incredibly valuable.

Getting back to it, I had pieces of those components’ code hot in my mind and found that if I just clarified my understanding of what they were doing, I could do similar in my already created system, clearing out the mistaken pieces but preserving the very established structure of everything I had done the day prior.

Lo and behold, it was a very clean change, and I managed to make audio happen!

We have:

- Audio events

- Sound pools to randomize for events.

- Event volume and pitch modulation.

- Mixing Channels to isolate certain sounds to certain effects and volume mixing: sfx, music, voice, ui. Those kinds of things anyone wants in the audio options of a project.
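To make that list a little more concrete, here is a rough sketch of how an audio event with a sound pool, volume and pitch modulation, and a mixing channel might resolve into one playback request. Everything here is hypothetical naming for illustration. It is not the GS_Audio API and it does not touch miniaudio.

```python
import random
from dataclasses import dataclass


@dataclass
class AudioEvent:
    sound_pool: list                  # candidate sound files for this event
    channel: str = "sfx"              # mixing channel: sfx, music, voice, ui...
    volume_range: tuple = (1.0, 1.0)  # min/max volume multiplier
    pitch_range: tuple = (1.0, 1.0)   # min/max pitch multiplier


def resolve_playback(event, channel_volumes, rng=random):
    """Pick a sound from the pool and randomize volume and pitch within the event's ranges."""
    sound = rng.choice(event.sound_pool)
    volume = rng.uniform(*event.volume_range)
    # The channel volume (from the options menu, say) scales the event volume.
    volume *= channel_volumes.get(event.channel, 1.0)
    pitch = rng.uniform(*event.pitch_range)
    return sound, volume, pitch


footstep = AudioEvent(
    sound_pool=["step_01.wav", "step_02.wav", "step_03.wav"],
    volume_range=(0.8, 1.0),
    pitch_range=(0.9, 1.1),
)
sound, volume, pitch = resolve_playback(footstep, {"sfx": 0.5}, random.Random(7))
```

Passing in the random generator keeps the sketch deterministic when you need it to be, and small variations in pitch and volume are what stop a repeated footstep from sounding like a machine gun.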

There was a bit more lingering after that, but we actually have GS_Audio at all now! That was a huge triumph on my end, as I had no idea if I could do any of what I mustered. It involved reading miniaudio source code and interpreting it into O3DE systems, figuring out 3rd party dependencies and how they get included in projects, being that these outputted things are in the build data instead of a Gem… There was lots to doubt myself with.

Then, after the next pass at audio, I fixed up some of the last issues I had.

We have sound instancing, which allows playing the same sound repeatedly even while it’s being used already. A very important piece of handling sound.

Adding filters and effects to the mixers. A necessary feature for general development, but also to implement the one premade effect miniaudio has: Reverb.

And finally getting the soundtrack pieces implemented. Nothing fully featured, but being able to start one and end one. The reason this was a separate piece is that we actually want to add blending features based on tempo and time signature, as well as blending musical layers in and out while a primary score is playing. Not something every project needs, but a nice target to work towards over future iterations of the featureset.

The GS_Play Feature Hitmap:

We now have a really nice range of foundations established, nearly covering the full breadth of the GS_Play features I have established, relating it to tech from my previous projects, assets, and systems.

- Startup, saving, level handling.

- Dynamic Cameras and camera handling systems.

- Unit, and character input handling. Character movement, grounding, locomotion state changing, movement stat changing.

- Interaction and world manipulation. Using your controlled character, and state of the game, to influence the world’s arrangement.

- UI handling: isolated menu navigation, complex window handling, inter-window manipulation, and partial/fragmented window changing and selection.

- Environmental presentation and contextualization. World time, day and night, sun and stars.

- Robust Sound and music handling, including pooling, randomization, pitch, and effects.

Along with the initial scaffolding around character “performer” systems, entrenched around handling the actor 3D model of your characters in complex and optimal ways.

It’s getting exciting what we will be able to do with just this, and how the features will expand as we build up using the framework.

Of course, we now have fewer and fewer excuses to pivot away from making a compelling and engaging game experience… The hard part… terrifying.

In Conclusion

I hope this month has gripped you as much as it has me! The GS_Play gameplay framework is blazing forward day by day, and we’re becoming increasingly capable of working on videogames. Who would have imagined?

I am excited for each coming month. As we work forward, there will be cooler and cooler material to show off. I hope to see you there!

Here’s to one more.
